HHS Is Making an AI Tool to Create Hypotheses About Vaccine Injury Claims

Summary

The US Department of Health and Human Services is building a generative AI tool to analyse reports in the national vaccine adverse‑event database (VAERS) and to generate hypotheses about potential vaccine injuries. The tool, in development since late 2023 and not yet deployed, would surface patterns in noisy, unverified VAERS submissions, a dataset experts say is useful for generating leads but not for proving causation.

Key Points

- HHS is developing a generative‑AI system to scan VAERS data and propose hypotheses about adverse events following vaccination.

- Experts warn VAERS is an unverified, denominator‑less, noisy reporting system; it can suggest signals but cannot prove causation.

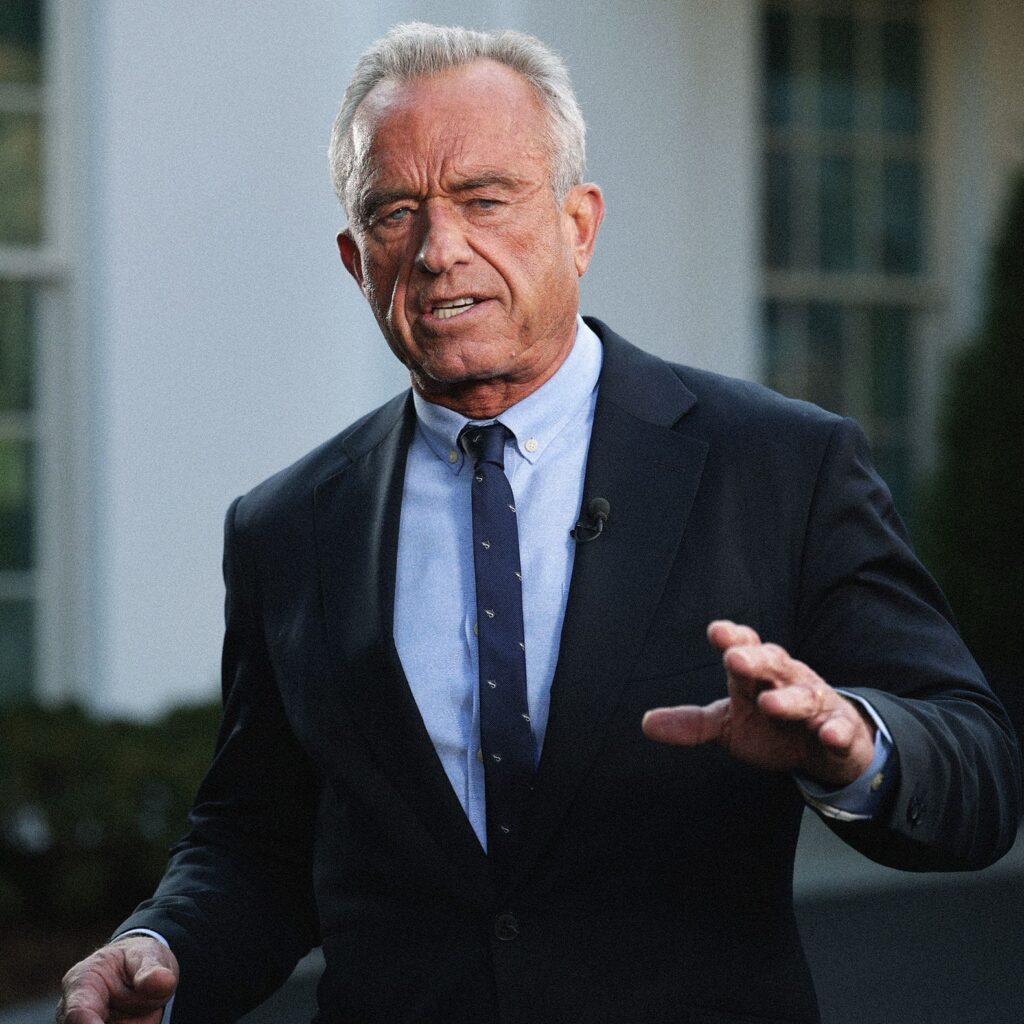

- There are concerns the system could be used politically — notably by HHS Secretary Robert F. Kennedy Jr., a long‑time vaccine critic who has already changed vaccine recommendations.

- Large language models can spot patterns but also hallucinate; researchers stress the need for epidemiological follow‑up, proper data pairing and human review.

- Staff reductions at CDC and recent controversial internal proposals on vaccine regulation raise questions about whether there is capacity and independence to investigate AI‑generated leads properly.

Content summary

The article explains that the AI use case appears in an HHS inventory of AI projects from 2025. VAERS, jointly managed by the CDC and FDA, accepts unverified reports from anyone, so it has historically been treated as hypothesis‑generating rather than definitive. Scientists quoted include Paul Offit, who calls VAERS “noisy,” Leslie Lenert, who notes government groups already use NLP on VAERS and that LLMs are a natural next step, and Jesse Goodman, who emphasises the need for skilled human follow‑through. The piece also cites recent policy moves and internal controversies at HHS and FDA that heighten worry that the tool could be applied in ways that shift vaccine policy, or that its output could be misinterpreted by non‑experts. The article notes VAERS has in the past helped flag genuine rare issues (blood clots after the J&J COVID‑19 vaccine, myocarditis after mRNA COVID‑19 vaccines), but stresses that any AI leads require rigorous epidemiology to confirm risk.

Context and relevance

This story sits at the intersection of AI governance, public‑health surveillance and political influence. It matters because a government using generative AI to mine a messy public safety database could speed detection of real signals — but it could also produce misleading claims that feed misinformation or policy changes if human oversight and proper epidemiological methods are lacking. With ongoing debates over vaccine policy and cuts to public‑health staffing, the balance between faster detection and responsible interpretation is especially fragile.

Why should I read this?

Short version: if you care about vaccines, public health or how governments use AI, this is one to skim. It flags a real tension — AI can speed up spotting odd patterns, but VAERS is noisy and LLMs can hallucinate, so any claim needs proper follow‑up. Also, given the political stakes around vaccine policy, how HHS uses this tool could shift public debate. We’ve read it so you don’t have to dig through the inventory — worth a look.